-

ABM Technology Ecosystem

This week, my business partner B2B Marketing held its annual ABM Forum in London and, leading up to the conference, we have been working on a new edition of our Martech Spotlight Report for that topic. As always, my role in the B2B Marketing team is to present an overview of the vendor landscape and profile the most important vendors.

I recall way back in 2016 writing a blog at Forrester titled something like “ABM: Let’s move from Cacophony to Euphony”. ABM was being used in reams of promotional copy distributed by marketing consultancies, data service providers, and software automation vendors alike, but it was not being clearly defined by any of these actors. ABM ended up meaning different things to different people. And this has not really changed: in my most recent survey for Research In Action, four out of five respondents said that ABM effectiveness falls short of their expectations. So much for eight years of marketing spend by all those vendors!

In our 2016 Forrester ABM landscape report, we wrote that many organizations may not have to buy anything at all for their first ABM initiative; it is more than likely that they already have automation in place that they can deploy. We also called out the fact that there are other potentially useful solutions that haven’t (yet) incorporated the ABM moniker into their marketing messaging.

So, one important difference in the new edition of the Martech Spotlight Report for ABM is that we have expanded the landscape extensively – it is not only those vendors who use the term in their messaging. We have created what we call an ABM Technology Ecosystem which is based on the overall ABM process. This is how we see the ABM process:

- You Build Your Audience. Here, you need CRM tools and perhaps Data Enrichment tools.

- You Research the Accounts you’re targeting. For which you need Intent and Predictive Analytics tools.

- Then you map out account strategies/campaigns. Deploying Orchestration and even Sales and Marketing Alignment Platforms.

- For your engagement with accounts, you will use Content, Event Management platforms. More advanced ABM teams will also deploy Personalization and Sales Enablement tools.

- In the Campaign Execution or Convert phase, you will use your Marketing Automation platform and perhaps also Data Visualization and Programmatic Ad platforms.

- To measure your ABM success, you’ll need to use Analytics tools, even ROMI Dashboards or Visitor Intelligence Software.

That is a total of 16 different martech categories involved, each with its own landscape of vendors. All of which means that I needed to add some 35 new vendor profiles to the report as well as update the 25 vendor profiles from the 2023 report. The overall Ecosystem graphic was presented at the ABM Forum while the full report will come out in December.
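For readers who like to see the ecosystem as data, here is a minimal Python sketch of the process steps and categories listed above. The phase labels are my own shorthand for the six steps and the helper names are purely illustrative; only the category names come from the list itself. It simply confirms the count of 16 categories and lets you look up where a category sits in the process.

```python
# Phase labels are shorthand (an assumption) for the six process steps;
# the category names are taken from the list in the text.
ABM_ECOSYSTEM = {
    "Build audience": ["CRM", "Data Enrichment"],
    "Research accounts": ["Intent", "Predictive Analytics"],
    "Plan account strategy": ["Orchestration", "Sales and Marketing Alignment"],
    "Engage": ["Content", "Event Management", "Personalization", "Sales Enablement"],
    "Convert": ["Marketing Automation", "Data Visualization", "Programmatic Ad"],
    "Measure": ["Analytics", "ROMI Dashboards", "Visitor Intelligence"],
}


def count_categories(ecosystem):
    """Return the number of distinct martech categories across all phases."""
    return len({category for categories in ecosystem.values() for category in categories})


def phase_of(category, ecosystem=ABM_ECOSYSTEM):
    """Return the phase a category belongs to, or None if it is not listed."""
    for phase, categories in ecosystem.items():
        if category in categories:
            return phase
    return None


if __name__ == "__main__":
    print(count_categories(ABM_ECOSYSTEM))
    print(phase_of("Personalization"))
```

A structure like this is also a handy starting point for auditing your own stack: mark which categories you already own before buying anything new.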

In a Martech Vendor Spotlight, we score each vendor on four criteria that we see as critically important to potential buyer teams when evaluating and shortlisting vendors. The stress is on CUSTOMER SUCCESS more than individual product features, so the criteria are:

- Market Momentum. Here, we have assessed how well a vendor helps prospective buyers to understand the solution offering and how it fits into their environment.

- Customer Focus. Almost all software solutions are now delivered as-a-service and the most successful SaaS vendors are those who help their clients on an ongoing basis, not just in response to support calls.

- Price vs Value. As with any business investment, marketing executives need to be assured that the investments they make in technology are providing an appropriate payback.

- Implementation Success. The true test of a business partnership is the commitment from a vendor to supporting the integration of their system with whatever the client has in place already.

We score each criterion as Strong, Good, Medium, or Low.

Write me if you would like to get more details of the vendor evaluations.

Always keeping you informed! Peter

-

New Vendor Spotlight

As the resident “Lead Analyst” within their Propolis service, I’ve been working with the B2B Marketing organization for many years now. Launched in March 2021 as an exclusive digital community for B2B marketers, Propolis has collected, by design, a very diverse membership, not just marketing executives but entire marketing teams in companies of all sizes.

I think that Propolis has proven to be nothing less than a game changer for B2B marketing as an industry, as a profession, and most of all as a community. After all, the way that business professionals want to consume and discuss industry and disciplinary trends has changed to become much more:

- Digital. Meaning that there is interactivity, not just website documents.

- Democratized. Where all job levels can afford to benefit from the information.

- Discussion-based. Where peer inputs are valued just as much as the so-called experts.

In the next weeks we will be extending our coverage and publishing our first B2B Marketing Martech Vendor Spotlight report, this edition focused on vendors supporting Account-Based Marketing (ABM).

When I was asked to design and research the Spotlight report, I realized that here was a great chance to create something different from the classical research analyst reports available so far. Those waves and quadrants score and compare the vendors based primarily on their product offering. My experience helping B2B marketers in their vendor selection process (I often recommend using Design Thinking) has been that the supplier itself is equally, or perhaps even more, the focus of attention and evaluation. The make-or-break questions that I get asked in my workshops are less about product features and more about how a vendor will work with them as a client:

- “Will they help us to set up and run the solution?”

- “How do they react when something goes wrong?”

- “Do they have programmes to help ensure we get a return on our investment?”

So, first we select and spotlight those vendors we feel are most relevant and important for the Propolis members (a mix of both large enterprise and small-medium sized businesses). Then we profile each vendor and score it on four criteria relevant to the topics listed above. We also field a detailed vendor questionnaire asking about the resources in place to ensure successful implementation, integration, user adoption, and even value management of their ABM solution. Not every vendor responds and completes the survey (yet – as this is the first report after all) but my 20+ years of working at META Group, Gartner and Forrester Research, plus seven years of user surveys and report writing for Research In Action, is experience enough for me to craft and score all the profile pages.

These four criteria are critically important to potential buyer teams when evaluating and shortlisting vendors, with the stress on CUSTOMER SUCCESS more than individual product features:

• Market Momentum. Here, we have assessed how well a vendor helps prospective buyers to understand the solution offering and how it fits into their environment.

• Customer Focus. Almost all software solutions are now delivered as-a-service and the most successful SaaS vendors are those who help their clients on an ongoing basis, not just in response to support calls.

• Price vs Value. As with any business investment, marketing executives need to be assured that the investments they make in technology are providing an appropriate payback.

• Implementation Success. The true test of a business partnership is the commitment from a vendor to supporting the integration of their system with whatever the client has in place already.

We score each criterion as Strong, Good, Medium, or Low.

The B2B Marketing Martech Vendor Spotlight Report on ABM will be published to Propolis clients during The Global ABM Conference from B2B Marketing in London in November. Further Spotlights will follow over the next year covering topics like Marketing Operations, Marketing Resources/Asset Management, Digital Content Management, Digital Experience, Customer Data Management, and Digital Event Management.

Always keeping you informed! Peter

-

Design Thinking in The Vendor Selection Process

Many years ago, working at HP, I quickly learned to schedule my vacations according to the marketplace. Common practice was that, when customers (well, prospects) went on their vacation, they first dumped some work on my colleagues and me, sending us a thick envelope (no email in those days) containing a “Request for Proposal” or, even worse (sounds so uncommitted!), a “Request for Information”: long, detailed documents laying out a series of specifications and functions that they wanted to see in our product.

We’d be expected to process/answer many detailed questions and submit a response when they came back from vacation. Most RFPs were issued, especially here in Germany, during the summer and just before Christmas.

I got the impression that creating these RFP documents, and then processing the vendor replies, was the main event for many buyers. It wasn’t necessarily about picking the right solution. The later stages (presentation, demo, negotiation, sales) seemed to happen very quickly afterwards.

Further work experience also taught me that the famous adage that “70% of IT projects fail” is very true and continues to be so. I would suggest that one reason for this is the above process. Many companies assume that the most important component of any process automation project is the Vendor Selection Process (VSP). Once that’s done, it is easy sailing – just install it, configure it, (perhaps) train the users and run the system.

Well, I’ve now assisted many a client through their VSP and sat in on their meetings with potential suppliers to provide my input as “an outsider”. I trust that my assessment of the vendors’ offerings and potential to fit into their planned technical architecture was useful. But still, I’ve often left the meetings with the feeling that the client wasn’t really prepared for the full project. I would notice that many aspects of the project were not yet thought through. There were often:

- No sample business workflows (much of which is outside the software they’ll buy)

- No profile of their potential users (devices, competencies, preferences)

- No sample reports or dashboards designed

- No prioritization in their list of requirements – all was equally important.

Process automation projects fail because of a bad fit between project solution and requirements. And when I say “project” I mean much more than the software product. The solution must cover the complete business scenario to be improved, which is usually only partly through technology – process and organization always need to be tuned as well.

I suggest that it is now time to reconsider the role of the VSP – it should not be “the means to an end” – better to turn it into the kick-off for a process transformation project.

In 2009, the Hasso Plattner Institute of Design at Stanford came up with the concept of “design thinking” which has been adopted by many IT organizations and software vendors as the basis for their development projects. The associated meeting/communications method, SCRUM, has now even been adopted by modern marketing departments. The Stanford School process proposes these steps in a project:

Empathize – Define – Ideate – Prototype – Test.

So here is what I envisage in a modern marketing process automation project:

Empathize. Collect and describe the requirements not by listing technical specifications but by describing real business scenarios – improved workflows that marketers care about. Include persona profiles and the desired “usage tone” (marketing- or IT-centric, advanced or casual user, terminology known or not, device preferred, location of task, reporting requirements, millennials!). A scenario documentation should resemble the briefings given to marketing agencies – not an RFP spreadsheet.

Define. Based on the make-up of the user-team and other requirements such as integrations and services, you should be able to easily segment the vendors and arrive at a shortlist. Provide the scenario documentation to those vendors and gather their responses as a first selection phase. Allow them to be creative – they may even be able to propose process improvements that you had not yet identified.

Ideate. Invest time here to engage with three to five vendors to explore how they would help you to automate the scenarios. If you want to restrict this phase, limit how many scenarios each vendor works on – one will probably suffice for you to form an impression of the vendor’s suitability as a business partner.

Prototype. The people at Stanford would love you to be putting Post-It notes on the wall in this phase, but you should probably expect your vendors to be able to demonstrate how they would support your scenarios with their software. You should now be down to one or perhaps two vendors. As well as checking whether they have realistic expectations, also use this phase to observe how the project members will work together: vendor people with your colleagues, but perhaps also colleagues of yours who are strangers to each other. Create a conflict situation by changing a scenario and see how all players react.

Test. After selecting your technology provider, you now move into the project roll-out phase, which is usually focused on just one team, location, or business area to generate success before a more expansive roll-out. Continue to expect the vendor to treat you as a business partner and to work to ensure your success.

The test phase should never end. Wise project managers will maintain a running, live doc of the business requirements, because they’ll change over time. Keep it somewhere visible and editable so that you can constantly re-check what you need, and the costs associated with it. Also, ask yourself periodically: what can you cut? What hasn’t been used in months? Who is now using the software – is that different from what was initially assumed?

Something to think about the next time you plan an automation project.

Always keeping you informed! Peter O’Neill

-

Some Background to my Vendor Research

If you are reading this, then I assume that you’ve looked at a couple of my Vendor Selection Matrix™ reports and are thinking … they look like magic quadrants or waves but they seem to be different… Well, they certainly are – in more ways than one!

I do this work with my business partner Research In Action. My reports are written for marketing software buyers who need to automate one or more important marketing processes and are researching which vendors COULD provide the software their business will require.

They’re likely to be calling the project something close to the process(es) being automated and improved, but there is no guarantee that the vendors will be using that terminology when describing their products.

I design my projects around the process name I think Marketers would use and survey businesses on their experience. Often, that collects a landscape of vendors using different technology labels but that is the reality.

All in all, there is a multitude of vendor report types out there. On one end of the spectrum, you have the Analyst Reports with industry analyst expertise and in-depth research. On the other end, we have Crowd-Sourced Reports in which rankings are driven by the quality and quantity of user reviews.

Analyst Reports

Pros: The “Tier One” industry analysts doing this work are experts in their field and seriously know their stuff. They sit through strategy and product presentations/demos and some even get feedback from referenced customers. Vendors must invest days of time and resources to provide the right information to the analyst. Of course, many also sign up as clients and engage with the analyst on an ongoing basis to optimize the relationship.

Spoiler Alert: In my time as Research Director at Forrester, I had an analyst in my team who consulted specifically on how to execute the process of Analyst Relations (it’s part of B2B Marketing after all) – including how to get yourself placed in an optimal position in a quadrant or wave analysis.

Cons: The Analyst Report is written for the research firm’s clients, usually large enterprises – which influences the list of vendors included, of course. These are smaller audiences than is often assumed. Usually, the readership of each report behind their paywall is perhaps in the hundreds – one vendor client told me that the latest two reports where his product was featured had 480 and just 58 views on the research website.

That can be a little depressing not only for the vendor but also for the analyst – all that work and so little attention! Of course, the brand power, and resulting product-marketing ego, of being in a “Magic Quadrant” or “Forrester Wave” means that some vendors buy reprint-licenses and offer a download of the report through their website. And they book the analyst to make speeches/webinars about the research – a little show business that compensates for the initial disappointment perhaps.

Crowd-Sourced Reports

Pros: It’s always helpful to seek out feedback from other users; peers who share the good, bad, and the ugly about a product. There are several such feedback websites now up and running for all types of software applications, including marketing.

Cons: Have you ever looked up your favourite restaurant on Yelp, noticed a few one-star reviews, and wondered how they could come to such contrasting conclusions? A single review (good or bad) shouldn’t dictate your software-buying decision, just like with any other product. Remember: User opinions have varying levels of actual marketing automation understanding – just because someone writes a review does not make them an expert in the field.

Additionally, report rankings are driven by the quality and quantity of user reviews. If a company has a few hundred reviews with a high rating average, and another has a few thousand reviews with above-average ratings, it is likely the latter will position better in the report due to the sheer number of reviews. This is a huge advantage for larger vendors that have been on the market for a long time, and it’s likely they have review incentive programs to boost their ranking.

Research In Action Reports Have Both Perspectives

The methodology at Research In Action is that we first survey 1,500 practitioners about THEIR view of automating the process(es) in question. Then we ask them to name one or two vendors they associate with the project and give us feedback on the vendor’s product, service, value-for-money, and ability to innovate. Only the vendors who score highly enough in the survey get into the Vendor Selection Matrix™ report in the first place (usually 15 to 20 vendors).

Then, that curated market feedback is seasoned with a touch of industry analyst’s (that’s me) expertise to provide a more well-rounded recipe for successful vendor selection. In fact, much more than the quadrant or wave reports, these reports are embellished with several pages of trends insights that inform both buyers and vendors alike about what is most important when investing in the upcoming project.

Research In Action Reports are Widely Read

When Research In Action publishes its reports, they are made available to several communities:

- Survey respondents. The 1,500 marketing software decision-makers who answered the survey questions are provided with the full report as feedback

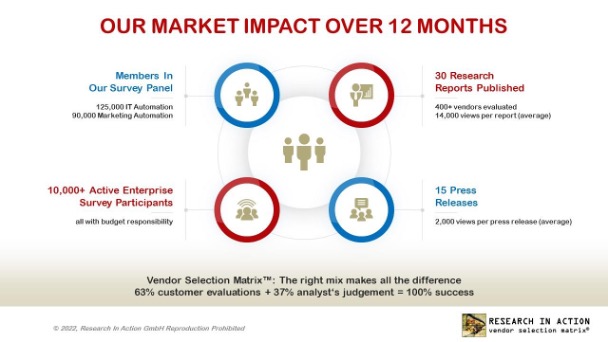

- Survey panel. Research In Action maintains an active survey panel on a global basis with contact details and topics of interest: a current total of 125,000 IT Automation decision-makers and 90,000 Marketing Automation decision-makers. These panel members are informed of the report and can download it if desired

- Website visitors. Any visitor to the Research In Action website sees a “public version” without the exact scores and matrix placements of each vendor (to save their embarrassment) but with all insights and the most important facts on each vendor.

- Vendor reprints. Research In Action does also license reprints, where a vendor can distribute a copy of the report, with their detailed profile, to interested parties.

On average, each report gets tens of thousands of clicks on our website. Personally, I am quite proud that so many people now get to see my work. And, when I am booked to do speeches and webinars, I know they are booking me personally, not the brand power.

Our reports really do fill that gap between an industry analyst report focused on large enterprise needs, and the “trip-advisor” type of review websites. They also reach and assist a broader community of software buyers. Lastly, the community reading the reports is probably a whole order of magnitude larger than the audience able to access the “Tier One” research reports. Here is our latest Market Impact statistics chart.

Always keeping you informed! Peter